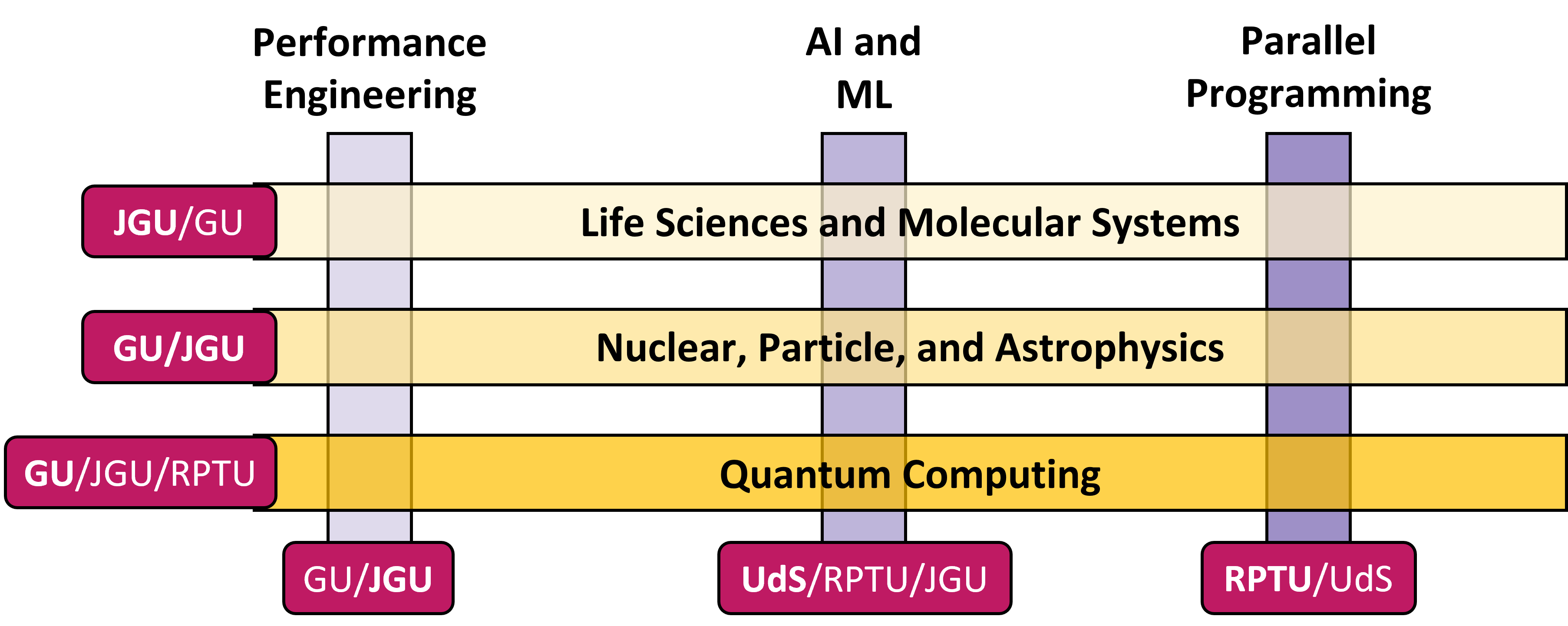

NHR South-West is a strategic collaboration between

- Goethe University Frankfurt (GU),
- Johannes Gutenberg University Mainz (JGU),
- University of Kaiserslautern-Landau (RPTU) and
- Saarland University (UdS),

and is part of the German National High Performance Computing (NHR) Alliance.

By combining the strengths of its partners in High Performance Computing (HPC) and Artificial Intelligence (AI), NHR South-West provides researchers with access to cutting-edge HPC systems and services. In the Simulation and Data Labs, our teams work closely with domain scientists, while the Method Labs focus on developing innovative computational methods.

Core and Research Competencies